Orca-Math: Microsoft's Little AI Model That's a Quant Giant

Orca-Math uses a special technique to make it excel in math reasoning, outperforming models 10 times its size

At a Glance

- Microsoft research shows less can be more by training a mini AI model to excel at quantitative reasoning tasks.

Microsoft has unveiled a small language model that can solve math problems better than models 10 times its size.

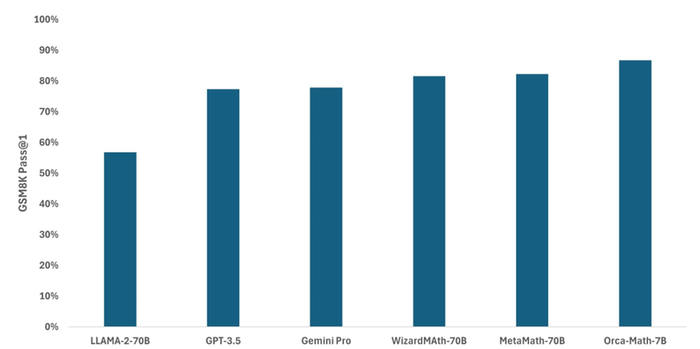

Orca-Math has just seven billion parameters, yet it solves grade school math problems better than GPT-3.5, Gemini Pro and Llama 2 70B.

The model is a fine-tuned version of Mistral 7B, trained on 200,000 synthetically generated math problems. Although this dataset is smaller than those used for some other math models, Microsoft's research team argues that smaller models and datasets allow for faster and cheaper training.

Business use cases

Financial firms can apply AI models with math reasoning capabilities to portfolio optimization and risk modeling. Manufacturing firms could likewise use them for supply chain optimization and risk modeling. In short, such models can be used anywhere a business needs quantitative problem-solving skills.

Specialized math models such as WizardMath-70B and MetaMath-70B could already power these use cases. But Microsoft’s new Orca-Math outperforms both despite being 10 times smaller.

Tested on the GSM8K benchmark, Orca-Math scored 86.81% on the pass@1 metric, outperforming larger systems such as Llama 2 70B (56.8%), WizardMath-70B (81.6%) and even GPT-3.5 (77.4%).

Credit: Microsoft
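The pass@1 metric counts a problem as solved only if the model's single generated answer is correct. The sketch below illustrates that scoring under stated assumptions: answers are compared by exact match on the final number, and reference solutions use GSM8K's `#### <answer>` ending. It is not Microsoft's evaluation harness, and the function names are hypothetical.

```python
import re
from typing import Optional

# Matches integers or decimals, allowing thousands separators (e.g. "1,234.5") and a leading minus sign.
NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_final_number(text: str) -> Optional[float]:
    """Return the last number in a solution as a float, or None if there is none."""
    # GSM8K reference solutions end with "#### <answer>"; if that marker is present, look only after it.
    if "####" in text:
        text = text.split("####")[-1]
    matches = NUMBER_RE.findall(text)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def pass_at_1(predictions: list[str], references: list[str]) -> float:
    """Fraction of problems whose single generated answer matches the reference answer."""
    if not references or len(predictions) != len(references):
        raise ValueError("need equal-length, non-empty prediction and reference lists")
    correct = 0
    for pred, ref in zip(predictions, references):
        p, r = extract_final_number(pred), extract_final_number(ref)
        if p is not None and p == r:
            correct += 1
    return correct / len(references)


predictions = ["48 + 24 = 72 clips. The answer is 72.", "She earns $10.", "I am not sure."]
references = ["Natalia sold 48/2 = 24 clips in May.\n#### 72", "#### 12", "#### 5"]
print(round(pass_at_1(predictions, references), 3))  # → 0.333
```

Taking the last number in free-form output is a common heuristic; real evaluation setups often prompt the model for a fixed answer format instead.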

Orca-Math also demonstrated strong performance on other math datasets such as AddSub, MultiArith and SingleEq.

Part of Orca-Math’s success comes from the researchers' use of an iterative learning process on top of fine-tuning: the model practices solving problems and keeps improving based on feedback.

“For every problem, we allow the SLM to create multiple solutions. We then use the teacher model to provide feedback on the SLM solutions. If the SLM is unable to correctly solve the problem, even after multiple attempts, we use a solution provided by the teacher,” a research post reads.

“We use the teacher feedback to create preference data showing the SLM both good and bad solutions to the same problem, and then retrain the SLM.”
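The data-construction step in that description can be sketched roughly as follows. This is a minimal illustration, not Microsoft's code: `student_sample`, `teacher_is_correct` and `teacher_solve` are hypothetical stand-ins for calls to the small model and the teacher model, the scheme of pairing every correct solution with every incorrect one is an assumption, and the retraining on the resulting pairs is not shown.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class PreferencePair:
    problem: str
    chosen: str    # a solution the teacher judged correct
    rejected: str  # a solution the teacher judged incorrect


def build_preference_data(
    problems: Iterable[str],
    student_sample: Callable[[str], str],            # hypothetical: one SLM attempt at a problem
    teacher_is_correct: Callable[[str, str], bool],  # hypothetical: teacher feedback on an attempt
    teacher_solve: Callable[[str], str],             # hypothetical: teacher's own solution
    num_samples: int = 4,
) -> list[PreferencePair]:
    pairs = []
    for problem in problems:
        # Let the small model produce several candidate solutions.
        attempts = [student_sample(problem) for _ in range(num_samples)]
        judged = [(s, teacher_is_correct(problem, s)) for s in attempts]
        # Deduplicate while preserving order.
        good = list(dict.fromkeys(s for s, ok in judged if ok))
        bad = list(dict.fromkeys(s for s, ok in judged if not ok))
        if not good:
            # Every attempt failed: use the teacher's solution as the positive example.
            good = [teacher_solve(problem)]
        # Pair each good solution with each bad one for the same problem.
        pairs.extend(PreferencePair(problem, g, b) for g in good for b in bad)
    return pairs
```

In this sketch a problem the small model always solves yields no pairs, since there is no bad solution to contrast it with.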

Model access

Microsoft researchers have been making strides in the growing small language model space. Prior to Orca-Math, they published the Phi line of models, the most recent being Phi-2, as well as the Orca series, which boasts enhanced reasoning capabilities.

To develop the dataset for Orca-Math, Microsoft’s researchers created a series of agent-based systems that collaboratively proposed methods for making math problems more difficult.

Repeating this process over multiple rounds produced increasingly complex math problems. Another agent then determined whether each problem was solvable and generated its solution.
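The multi-round hardening process can be pictured as a simple loop. In this sketch, `suggest`, `edit` and `solve` are hypothetical agent callables rather than Microsoft's actual components, and stopping a problem's lineage at the first unsolvable variant is an assumed design choice.

```python
from typing import Callable, Optional


def evolve_problem(
    seed: str,
    suggest: Callable[[str], str],          # hypothetical agent: proposes a way to make the problem harder
    edit: Callable[[str, str], str],        # hypothetical agent: rewrites the problem following the suggestion
    solve: Callable[[str], Optional[str]],  # hypothetical agent: returns a solution, or None if unsolvable
    rounds: int = 3,
) -> list[tuple[str, str]]:
    """Return (problem, solution) pairs for each harder variant the solver could handle."""
    results = []
    problem = seed
    for _ in range(rounds):
        suggestion = suggest(problem)
        problem = edit(problem, suggestion)
        solution = solve(problem)
        if solution is None:
            # Discard the unsolvable variant and stop hardening this lineage.
            break
        results.append((problem, solution))
    return results
```

Each solved variant becomes a training example, so one seed problem can yield several problems of rising difficulty.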

The dataset used to build Orca-Math is available via Hugging Face, and you can use it to fine-tune models for commercial applications.

The Orca-Math model itself, however, has not been released to the public. In the meantime, you can use the dataset to fine-tune an existing open source model, such as Llama 2 or BLOOMZ, to improve its math reasoning skills.
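As a starting point for such fine-tuning, the dataset's records can be converted into chat-style training examples. This sketch assumes each record has `question` and `answer` fields and that your training library accepts role/content message lists; the Hugging Face dataset ID in the comment is likewise an assumption to verify against the dataset card.

```python
from typing import Iterable


def to_chat_examples(records: Iterable[dict]) -> list[dict]:
    """Convert question/answer records into chat-style messages for supervised fine-tuning."""
    examples = []
    for rec in records:
        # Assumed field names; check them against the dataset card.
        question = (rec.get("question") or "").strip()
        answer = (rec.get("answer") or "").strip()
        if not question or not answer:
            continue  # skip incomplete rows rather than training on empty targets
        examples.append({
            "messages": [
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        })
    return examples


# Loading requires the Hugging Face `datasets` package and network access
# (dataset ID assumed; verify it on Hugging Face):
# from datasets import load_dataset
# records = load_dataset("microsoft/orca-math-word-problems-200k", split="train")
# train_examples = to_chat_examples(records)
```

The actual training step depends on the chosen base model and trainer and is not shown.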
