Meta Next-Gen AI Chips to Power Recommendations, Ranking

Meta unveils the next generation of its custom AI chips, doubling performance to power recommendation models across Facebook and Instagram

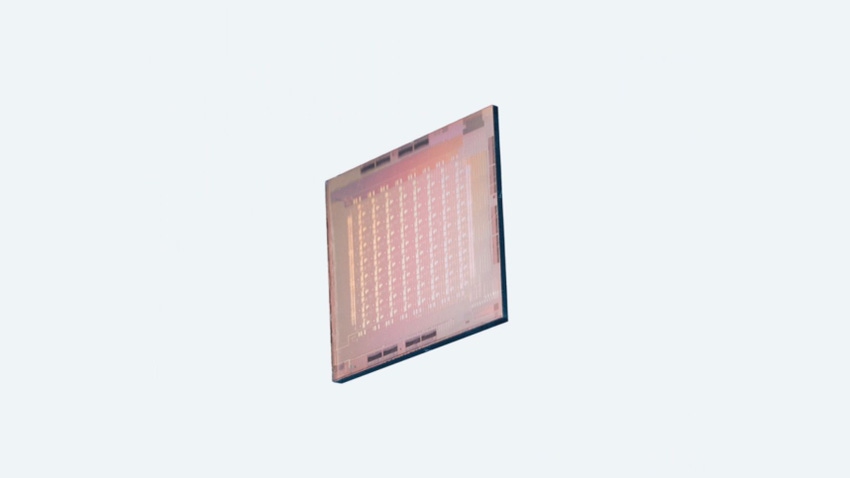

Meta has unveiled the next generation of its custom-built chips for AI workloads.

The Meta Training and Inference Accelerator (MTIA) v2 chips were designed in-house and offer double the compute and memory bandwidth of Meta's v1 chips.

The chips will be integrated into Meta’s data centers, powering workloads for its AI models, including deep learning recommendation models used to improve the user experience across the company’s apps and services.

Meta said the new chips can handle both low- and high-complexity ranking and recommendation models, which power ads on Facebook and Instagram.

By controlling the full hardware and software stack, Meta claims it can achieve greater efficiency than commercially available GPUs.

“We are already seeing the positive results of this program as it’s allowing us to dedicate and invest in more compute power for our more intensive AI workloads,” according to a company post.

Meta unveiled its first in-house AI chip, MTIA v1, last May and optimized it for the company's internal workloads.

Meta’s push to develop its own chips comes as the company ramps up its AI efforts, which demand more hardware.

The company recently showcased the AI infrastructure it is using to train its next generation of AI models, including Llama 3, but those clusters were built entirely on Nvidia hardware.

Omdia research ranked Meta among Nvidia’s largest customers last year; the company bought massive numbers of H100 GPUs to power its AI model training.

Rather than replace its Nvidia hardware, Meta said its custom silicon project will work in tandem with its existing infrastructure.

“Meeting our ambitions for our custom silicon means investing not only in compute silicon but also in memory bandwidth, networking and capacity, as well as other next-generation hardware systems,” Meta said.

The MTIA chips are poised for further expansion, as Meta aims to broaden the hardware's capabilities to include generative AI workloads.

“We’re only at the beginning of this journey,” Meta’s announcement concludes.

The MTIA v2 is the latest in a series of custom chips as big tech firms look to build their own hardware. Just last week, Google Cloud unveiled its first Arm-based CPU at its Google Cloud Next 2024 event.

Microsoft has its in-house Maia AI accelerator and Cobalt CPU, while Amazon powers workloads, including generative AI applications, with its AWS-designed Graviton and Trainium chip families.
