The UK AI Safety Summit: What to Expect and First Impressions

October 31, 2023

On Nov. 1 and 2, 2023, the U.K. government is hosting a high-profile international AI Safety Summit, where participants from domestic and overseas governments, big tech, the research community and selected civic groups will examine the risks posed by highly advanced frontier AI models and what should be done to mitigate their harmful impacts.

Making its presence felt

The purpose of this analysis is to consider the U.K. AI Safety Summit’s remit ahead of the event and to highlight its weaknesses and omissions. In a spirit of balance, however, we also identify positive elements. The summit’s focus on multilateral international collaboration is important: AI knows no boundaries, and many (but not all) of its impacts operate at a global level or have ramifications that extend across borders and international supply chains. This is one of the reasons the U.K. invited China to participate in the AI Safety Summit, a move that has been criticized in some quarters but that in Omdia’s view is sensible, even if China ultimately does not attend. China has deep AI expertise and big ambitions, and excluding it from a conversation on AI risks and mitigations would be short-sighted.

U.K. Prime Minister Rishi Sunak hopes the AI Safety Summit will position the U.K. as leading an international charge to mitigate risks from advanced AI. This may seem over-reaching for a medium-sized country in a challenging economic state that is no longer part of the European Union and that lags on AI regulation compared with many other countries (e.g., China) and regions (e.g., the EU). The UN, OECD and G7 nations also have initiatives in motion that are already looking at multilateral frameworks for governing AI. The AI Safety Summit will not put the U.K. government at the head of the international AI table, but it is at least trying to secure a seat at that table and make its presence felt.

Marks for effort

The five objectives for the U.K. AI Safety Summit are highly ambitious and include fostering international collaboration on frontier model research and safety, and identifying actions that individual organizations should take to improve AI safety. The U.K. AI Safety Summit alone is unlikely to achieve these goals, and it is best viewed as a first step. The announcement of a new U.K. AI Safety Institute is an example of such a step, as is Sunak’s aim of getting summit participants behind his proposal for an international body to monitor frontier AI model developments. We also expect Sunak to announce follow-on safety summits for 2024.

The summit has certainly succeeded in stimulating debate and shining a light on important issues. It has also spawned a wide range of parallel events in the U.K. under the umbrella of the AI Fringe. The AI Fringe has an important role to play: it addresses issues that appear to be missing from the official summit agenda (e.g., a deep dive on AI and the environment) and, being open to the public, has the inclusive, diverse remit that the official summit lacks.

The U.K. AI Safety Summit will ultimately be about exploration, identifying issues and suggesting processes rather than agreeing on concrete actions, governance frameworks and milestones. Expecting otherwise is unrealistic, because multilateral collaboration and agreement at an international level are notoriously difficult. The EU AI Act illustrates this even at a regional level: first tabled in 2021, it has been dogged by disagreements, subject to multiple revisions and is still not finalized.

Aligning with the big tech narrative on advanced AI

The U.K. government has published a detailed paper to accompany its AI Safety Summit (Capabilities and risks from frontier AI). The paper offers a definition of frontier AI and sets out the risk vectors that will be the focus of the summit. Frontier AI is described as “highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models”. This is not a neutral starting point, as it is in line with how OpenAI (developer of ChatGPT) and other big tech firms characterize highly advanced AI systems, for example through the Frontier Model Forum set up in July 2023 by Anthropic, Google, Microsoft and OpenAI. This suggests that, even before the summit begins, the boundaries for exploring what advanced AI is and means have already been set in accordance with the big tech agenda.

The U.K. AI Safety Summit also appears aligned with big tech’s assumption that the road to Artificial General Intelligence (AGI) is inevitable and desirable, despite the extreme long-term risks AGI could pose. AGI is broadly defined as AI systems that can generalize “knowledge” and expertise gained through training and apply it to a variety of tasks (practical, intellectual) in different domains, achieving the same or even better performance than humans undertaking the same tasks. However, not everyone agrees that the race to AGI is inevitable or desirable. For example, Timnit Gebru, founder of the Distributed AI Research Institute (DAIR), argues that the imperative to build ever bigger, more powerful and more capable AI systems is driven by the commercial interests of a few powerful corporations. To thinkers like Gebru, the AGI imperative is a form of technological determinism in which managing risks essentially means making people adapt to technology rather than the other way around. The purpose here is not to debate the pros and cons of AGI, but to argue that the U.K. AI Safety Summit should at least discuss alternative narratives on AI development trajectories and accommodate dissenting voices.

An outsized focus on longer-term extreme risks neglects the current harms caused by AI

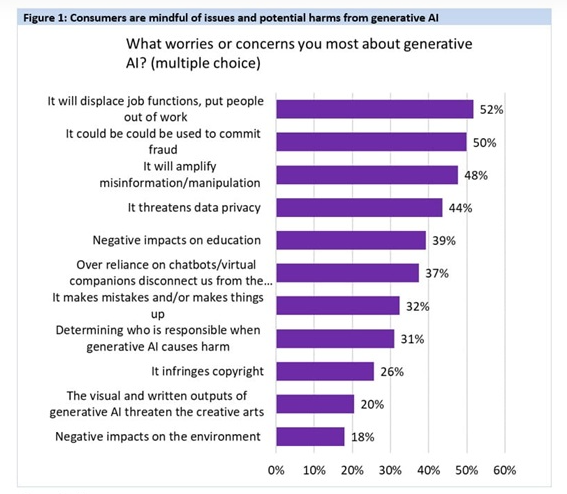

The U.K. AI Safety Summit agenda is focused on the longer-term extreme risks posed by highly advanced frontier AI models. This is a legitimate line of enquiry, but the summit should also address the immediate harms posed by AI. There is no doubt that current AI systems inflict harm on multiple levels, as documented by researchers, scientists and academics and exposed by investigative journalists. For example, biases in the data used to train AI models, including LLMs, can be reflected in their outputs, locking in discrimination and other undesirable values that can compound inequalities when fed through to real-world applications such as predictive policing. Synthetic media is an established tool for inflicting abuse and spreading misinformation. The new wave of generative AI (ChatGPT, Stable Diffusion, etc.) is intensifying existing harms posed by AI (e.g., job displacement, erosion of data privacy, manipulation and misinformation at scale) and introducing new harm vectors (e.g., disruption of the education system, copyright infringement).

These are not speculative issues known only to experts, but current harms that ordinary people are increasingly aware of, as shown by the findings from Omdia’s 2023 Consumer AI survey (see Figure 1). Survey respondents are notably worried about the potential of generative AI to disrupt jobs (52%), its use to commit fraud (50%) and to manipulate people and spread misinformation (48%), and its negative impacts on data privacy (44%).

Credit: Omdia

Immediate harms and long-term risks should be considered together

Short- and long-term risks from AI are not separate issues: the former shape the latter, and how AI functions in the short term can affect what happens further down the line. For example, greater transparency into training data and model architectures has both immediate and longer-term benefits. In the immediate term, it can help make current applications based on algorithmic decision making (e.g., algorithmic judicial sentencing, job recruitment) more transparent and accountable. In the longer term, the ability to see how and why models function can help determine why and how advanced LLMs could manifest emergent abilities.

We don’t have to wait for frontier models to trigger extreme risks

The U.K. government, and indeed many others, are working on the assumption that frontier models and AGI are the forms of AI that will give rise to extreme risks and associated harms. But this is not necessarily the case. Current generative AI tools, for example, are already being used for manipulation and misinformation, and this will continue, with the effects amplifying as synthetic media become more realistic and convincing with each successive generation of generative AI models. Generative AI could be used as a tool to destabilize political processes and regimes, leading to social breakdown and even conflict that could escalate into high-risk scenarios. A paper by Bucknall and Dori-Hacohen (2023) gives an illustrative scenario in which prolonged, targeted misinformation about climate change using synthetic media erodes public support, interferes with research and curtails a government’s ability to enact policies, with disastrous consequences.

A wider, more inclusive focus is needed

By now it should be clear that the U.K. AI Safety Summit has a fairly narrow remit. This is evident in its focus on long-term risks rather than immediate harms, and in an agenda that seems to support a narrative largely defined by big tech. But there are other omissions that limit what the summit could achieve. The summit is prioritizing two core risk categories: risks that emanate from the misuse of frontier AI, and risks from loss of human control over advanced AI. These are useful lines of investigation, but other important risk vectors also need attention. For example, there is scant mention in the summit agenda or accompanying paper of how AI is affecting the environment. Current LLM foundation models, and the even more powerful frontier models in development, are compute intensive, which has negative implications for the environment in terms of energy consumption and carbon emissions. The summit agenda and accompanying paper also give the impression that the harmful impacts of advanced AI on human rights are a secondary consideration.

The U.K. AI Safety Summit would benefit from being more diverse and inclusive in terms of who is taking part. The exact lineup of participants has not been revealed, but there does seem to be a focus on big tech and developed nations. It would be a loss if the conversation were dominated by big tech or by nations that already possess advanced AI and/or the resources to develop it. It is also unclear to what extent different communities have been included. Inclusion and diversity are critical to a summit focused on AI risks and safety, because what constitutes a risk from AI, how risks are distributed and the intensity of their impact can vary widely by nation (e.g., impacts on developing countries) and by community (e.g., impacts on differently abled people and on different ethnic groups).
